Key highlights:

- OpenAI secured long-term DRAM wafer supply deals through 2029, covering an estimated 40% of global output.

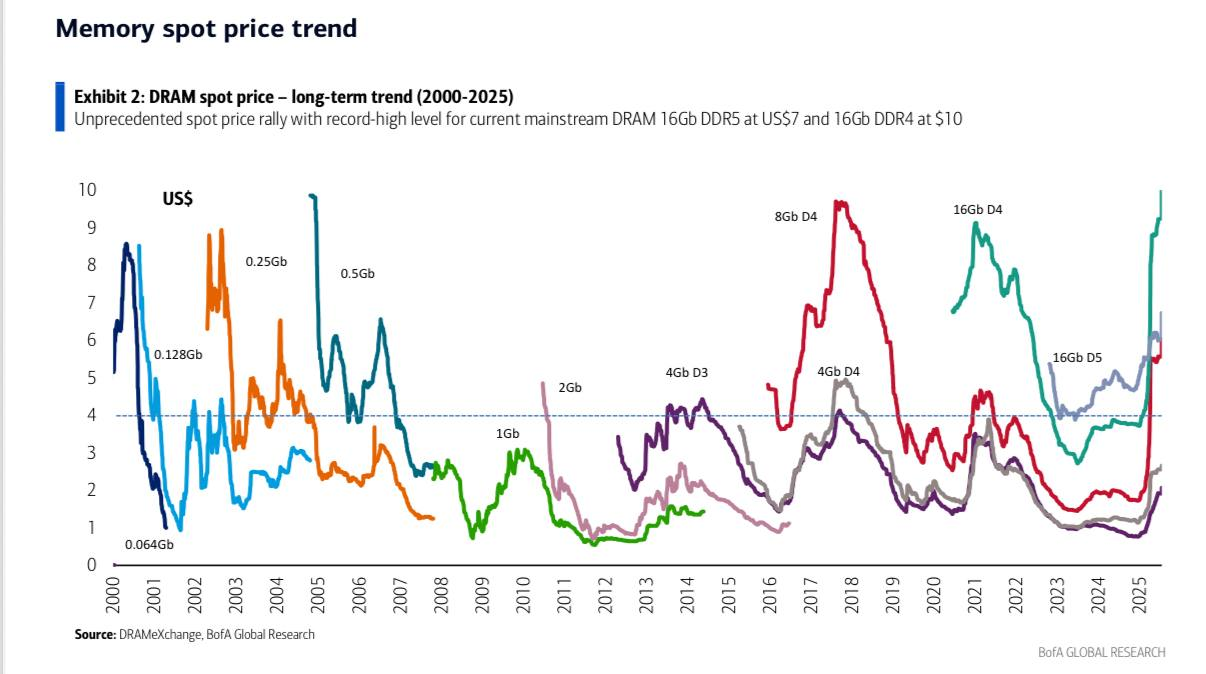

- Memory prices have surged as manufacturers redirect supply toward AI and enterprise customers.

- Analysts warn that relief may not arrive until new DRAM capacity comes online after 2027.

OpenAI has secured unprecedented long-term supply agreements with Samsung Electronics and SK Hynix, locking in deliveries of up to 900,000 DRAM wafers per month through 2029, according to industry sources. The volume is estimated to account for roughly 40% of global DRAM wafer output, a scale that has already begun reshaping the global memory market.
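Taking the reported figures at face value, the 40% share implies a global DRAM output of roughly 2.25 million wafers per month. This back-of-the-envelope check assumes that the 900,000-wafer figure and the 40% share are measured on the same monthly basis, which the reports do not spell out:

$$
\text{implied global output} \approx \frac{900{,}000\ \text{wafers/month}}{0.40} = 2{,}250{,}000\ \text{wafers/month}
$$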

The agreements prioritize OpenAI’s access to raw memory wafers rather than finished modules, giving the company preferential treatment at a time when demand for AI-focused infrastructure is surging. Analysts say the move has tightened supply for other buyers and accelerated price increases across consumer and enterprise segments.

Inside the unprecedented AI memory agreement

The contracts were reportedly finalized in October 2025, following meetings in Seoul between OpenAI CEO Sam Altman, South Korean President Lee Jae-myung, Samsung Chairman Jay Y. Lee, and SK Group Chairman Chey Tae-won.

While the Seoul talks on AI infrastructure were public, the full scale of the memory commitments emerged only later, once details of the agreements were disclosed.

Why wafers matter more than finished memory

By purchasing wafers directly, OpenAI effectively moves ahead of other customers in the production queue, securing priority allocation while supply is constrained. Industry experts estimate the broader memory market linked to the deal at $60 billion to $90 billion annually.

The wafers will be used to manufacture both DDR5 DRAM for conventional servers and high-bandwidth memory (HBM) designed for AI accelerators.

These volumes are closely tied to Stargate, OpenAI's reported $500 billion data-center initiative with Oracle and SoftBank, which aims to build a global AI computing network.

Prices rise as supply tightens across the market

Since the agreements were finalized, prices for consumer DDR5 memory have surged. Market data shows average retail prices rising two- to threefold within weeks, with some modules jumping more than 150% in under a month. Delivery times for large enterprise buyers have stretched to 10 to 14 months, with some orders pushed into late 2026.
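Multiples and percentages describe the same change in different terms. For a price that rises by a factor of $k$, the percentage increase is $(k - 1) \times 100\%$, so the reported figures line up as follows (simple arithmetic on the numbers above, not additional market data):

$$
\begin{aligned}
k = 2 &\;\Rightarrow\; (2 - 1) \times 100\% = 100\% \\
k = 2.5 &\;\Rightarrow\; (2.5 - 1) \times 100\% = 150\% \\
k = 3 &\;\Rightarrow\; (3 - 1) \times 100\% = 200\%
\end{aligned}
$$

A module that rose "more than 150%" therefore costs more than 2.5 times its earlier price, which falls within the two- to threefold range.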

DRAM price trends. Source: Jukan

Memory manufacturers have responded by redirecting supply toward higher-margin corporate contracts. Micron has announced plans to significantly reduce its consumer memory and SSD offerings in favor of enterprise and AI-focused products.

As a result, high-capacity modules such as 96GB and 128GB RDIMMs are increasingly scarce, while smaller 64GB modules often fall short for modern AI workloads.

Skepticism and long-term implications

Some industry veterans remain cautious. Former Micron engineer Dave Eggleston has questioned the durability of multi-year DRAM supply commitments, noting that such agreements are often renegotiated as market cycles shift. He also argues that major suppliers are unlikely to allow any single customer to exert leverage over strategic partners such as Nvidia.

Still, the immediate impact is clear. Samsung and SK Hynix together control roughly 70% of global DRAM production and about 80% of the HBM market, and their growing focus on AI workloads points to prolonged constraints for other buyers. Analysts do not expect meaningful price relief before 2027, when new fabrication plants, scheduled to come online between 2026 and 2030, begin reaching scale.

For consumers, the shift points to a prolonged period of higher memory prices and limited choice, while reinforcing how AI companies are becoming the dominant force shaping global semiconductor supply chains.

Source: AI Workloads Are Set to Consume Over 40% of Global RAM Output by 2029