Key highlights:

- Massive AI infrastructure spending is redirecting memory and energy resources away from consumer technology markets.

- Rising demand for specialized AI hardware is pushing RAM, SSD, and PC prices significantly higher worldwide.

- Power grid limits and long connection delays are becoming a critical bottleneck for future AI expansion.

The rapid expansion of large-scale AI infrastructure is no longer confined to data centers and balance sheets. It is reshaping global hardware, energy, and consumer technology markets, and ordinary users are increasingly feeling the impact.

What began as an aggressive race for AI computing power has evolved into a broader market disruption, driving memory shortages, energy bottlenecks, and rising prices across the tech ecosystem.

The financial model driving the infrastructure surge

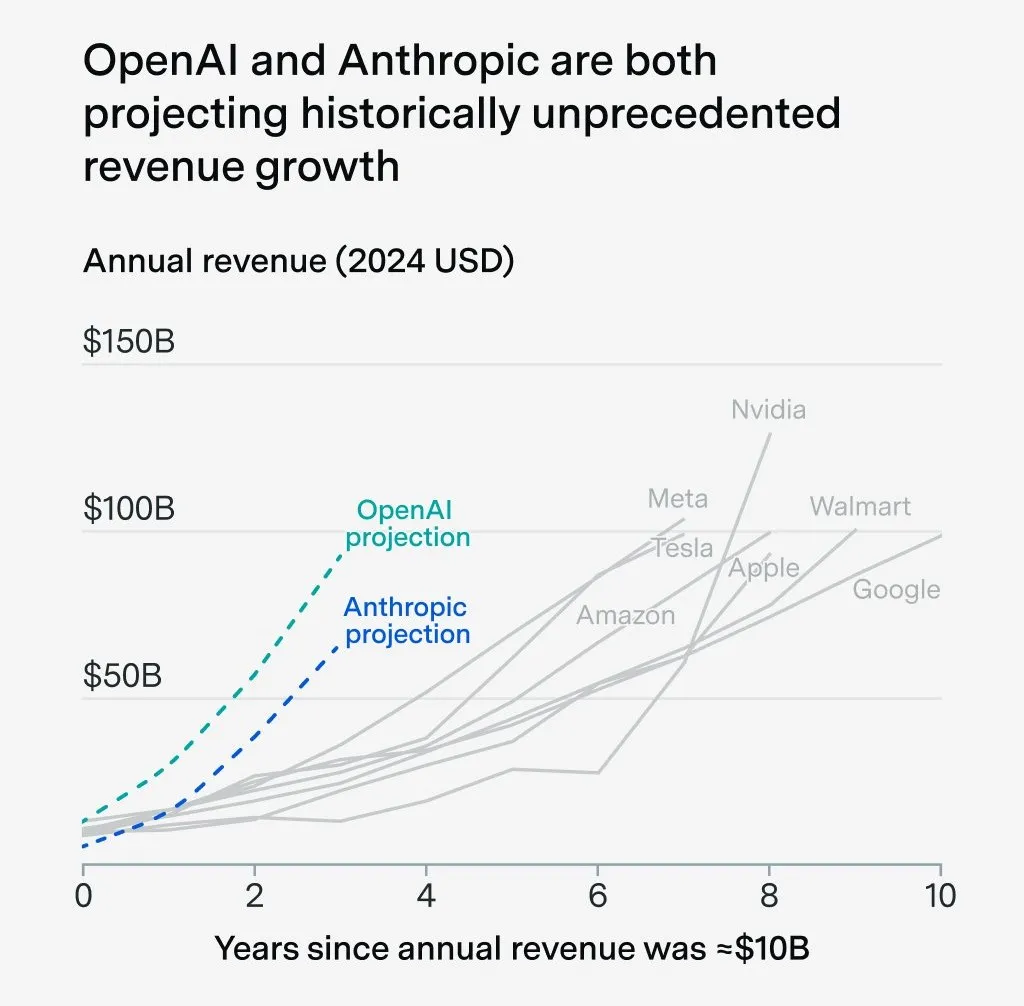

At the center of the disruption lies an aggressive investment strategy. OpenAI is reportedly losing around $12 billion per quarter while generating approximately $20 billion in annual revenue. Of a user base of roughly 800 million, only about 5% are paying customers, a critically low monetization rate.

Source: Epoch AI
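The reported figures can be combined into a rough back-of-envelope check. The sketch below uses only the numbers quoted above. The function name, the annualization of the quarterly loss, and the attribution of all revenue to paying users are illustrative assumptions, not something the source states.

```python
def unit_economics(users, paying_share, annual_revenue, quarterly_loss):
    """Derive rough per-user figures from the reported headline numbers."""
    paying_users = users * paying_share
    # Assumption: the quarterly loss holds steady for a full year.
    annual_loss = quarterly_loss * 4
    # Assumption: all revenue comes from paying users (ignores other income).
    revenue_per_payer_month = annual_revenue / paying_users / 12
    loss_per_revenue_dollar = annual_loss / annual_revenue
    return {
        "paying_users": paying_users,
        "annual_loss": annual_loss,
        "revenue_per_payer_month": revenue_per_payer_month,
        "loss_per_revenue_dollar": loss_per_revenue_dollar,
    }


figures = unit_economics(
    users=800e6,          # reported user base
    paying_share=0.05,    # reported share of paying customers
    annual_revenue=20e9,  # reported annual revenue, USD
    quarterly_loss=12e9,  # reported quarterly loss, USD
)
for name, value in figures.items():
    print(f"{name}: {value:,.2f}")
```

Under these assumptions, the figures imply about 40 million paying users and an annualized loss of roughly $48 billion, or about $2.40 lost for every dollar of revenue.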

Instead of slowing expansion or cutting costs, the company has escalated infrastructure spending. Billions of dollars are being poured into data centers, chips, and long-term supply agreements in an effort to secure future capacity and maintain competitive advantage.

One of the most striking examples is OpenAI’s reported agreements to purchase up to 900,000 DRAM wafers per month, representing nearly 40% of global DRAM wafer production. This scale of procurement is less about immediate operational need and more about locking down scarce resources before competitors can access them.
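To put the wafer figure in perspective, the two reported numbers imply a total for global output. This is a minimal sketch that treats "nearly 40%" as exactly 40%; the function name is hypothetical and the result is only as reliable as the reported inputs.

```python
def implied_global_output(contracted_wafers, share_of_global):
    """Global monthly wafer output implied by a contracted volume and its share."""
    return contracted_wafers / share_of_global


CONTRACTED_WAFERS = 900_000  # reported upper bound, wafers per month
SHARE_OF_GLOBAL = 0.40       # "nearly 40%", rounded for illustration

total_wafers = implied_global_output(CONTRACTED_WAFERS, SHARE_OF_GLOBAL)
remaining_wafers = total_wafers - CONTRACTED_WAFERS

print(f"Implied global output: {total_wafers:,.0f} wafers per month")
print(f"Left for all other buyers: {remaining_wafers:,.0f} wafers per month")
```

On these inputs, global output comes to roughly 2.25 million wafers per month, leaving about 1.35 million for every other buyer.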

Why memory has become the bottleneck

High-performance AI workloads depend heavily on specialized memory such as HBM. Manufacturing HBM is far more resource-intensive than standard DRAM: producing one bit of HBM can displace up to three bits of conventional DRAM due to capacity reallocation.

As a result, major manufacturers like Samsung and SK Hynix are redirecting production lines toward higher-margin enterprise memory. This has triggered a cascading shortage in mass-market components.

The effects are already visible:

- RAM prices have risen by 50–60% per quarter in some regions.

- SSD prices have increased two- to threefold, with 1–2 TB drives jumping from $200 to $500–700.

- Consumer-grade components are increasingly deprioritized in favor of data center contracts.

This is not a short-term correction, but a structural shift in how memory is produced and allocated.

Energy constraints and consumer market fallout

The crisis extends beyond chips and memory. Power availability has become a critical limiting factor for AI expansion. To bypass grid constraints, OpenAI has purchased 29 gas turbines with a combined capacity of 986 megawatts, effectively building private power generation to support data center operations.

In some regions, waiting times for new grid connections now reach up to seven years, while AI hardware refresh cycles typically last only four to six years. This mismatch means that some expensive infrastructure risks becoming obsolete before it is fully operational.
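The mismatch between connection delays and refresh cycles can be made concrete with a short calculation. The sketch below assumes, purely for illustration, that hardware is purchased when the grid connection is requested, so the wait eats directly into its refresh cycle; the function name is hypothetical.

```python
def years_in_service(grid_wait_years, refresh_cycle_years):
    """Years a system can run before its refresh cycle ends.

    Assumption (illustrative): the hardware is bought when the grid
    connection is requested, so the wait counts against its cycle.
    """
    return max(0, refresh_cycle_years - grid_wait_years)


GRID_WAIT_YEARS = 7      # upper bound cited in the article
REFRESH_CYCLES = (4, 6)  # range cited in the article

for cycle in REFRESH_CYCLES:
    left = years_in_service(GRID_WAIT_YEARS, cycle)
    print(f"{cycle}-year cycle, {GRID_WAIT_YEARS}-year wait: {left} years in service")
```

With a seven-year wait, both ends of the refresh range leave zero years in service. A two-year wait, by contrast, would leave two to four.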

Meanwhile, consumer markets are absorbing the downstream impact. The gaming industry has been hit particularly hard:

- Xbox Series sales have fallen by 70%.

- PlayStation 5 sales have declined by 40%.

- Building a mid-range PC has become 15–20% more expensive.

As manufacturers earn more from enterprise and AI-focused products, consumer electronics are becoming a secondary priority.

A structural shift, not a temporary spike

The current situation reflects a deeper transformation rather than a temporary imbalance. Data centers are now the primary customers, while consumers are increasingly priced out of hardware upgrades.

Analysts estimate that for OpenAI to break even, subscription prices may need to rise from $20 per month to $250–500 per month, a level that would fundamentally alter the accessibility of AI services.

Historically, severe component shortages, such as the DRAM crisis of 1999–2000, when prices surged three- to fourfold, have eventually spurred innovation. The current crunch could accelerate the development of alternative memory architectures, more efficient data compression, or leaner AI models that require fewer resources.

For now, however, the industry is locked in a strategic impasse. Infrastructure costs are rising faster than revenues, and the race for AI supremacy is reshaping markets far beyond artificial intelligence itself. The question is no longer whether consumers will feel the impact, but how long the system can sustain this trajectory.

Source: OpenAI vs. Everyone: The Memory Crunch, Energy Chaos, and Price Surges